Universal detector recognizes generated images

March 9, 2020

New York, March 9, 2020

Researchers at UC Berkeley and Adobe Research have introduced a “universal” detector that can distinguish real images from generated ones, regardless of the architecture or data set used to train the generator.

Today’s AI-driven image synthesis techniques, based on GANs (generative adversarial networks) and other methods, can produce highly realistic images that are difficult to distinguish from real ones. This technology can pose a serious threat to society: fake images can distort people’s view of the world and can be used to manipulate public opinion and even elections. In the wrong hands, it can also serve as a tool for harassment and can undermine AI safety and security systems.

However, identifying AI-generated images is technically challenging. Recognizing images created by one particular technique can be relatively easy, but such approaches generalize poorly, because generation methods differ widely in their training data sets, network architectures, loss functions and even image preprocessing. It remains difficult for a classifier trained to recognize one approach to be effective on images created by other models.

To address this, the researchers set out to build a single detector that generalizes across generator architectures and training data sets.

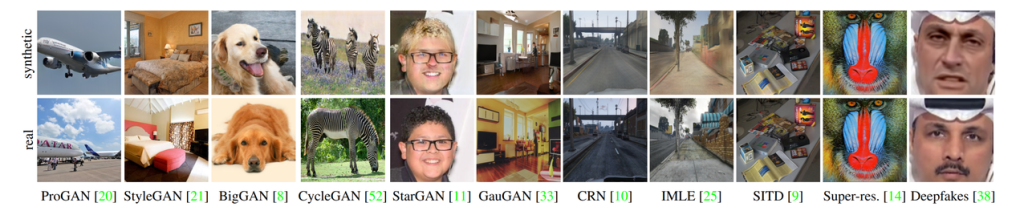

The researchers collected images from 11 different CNN-based image generator models. Most of these are GANs, which are by far the most common design for generative CNNs. They trained a universal classifier on images from a single generator, ProGAN, and then tested it on images from the other CNN-based generators.
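The evaluation protocol described here (train on one generator, test on generators never seen in training) can be illustrated with a deliberately tiny sketch. Everything in it is an assumption made for illustration: the one-number "artifact score" per image, the threshold "classifier", the helper names `fit_threshold` and `evaluate_held_out`, and the synthetic data. It is not the researchers' model or data.

```python
from statistics import mean

def fit_threshold(train):
    """Toy stand-in for training: midpoint between the class means of a 1-D feature."""
    real = [x for x, y in train if y == 0]
    fake = [x for x, y in train if y == 1]
    return (mean(real) + mean(fake)) / 2

def evaluate_held_out(threshold, test_sets):
    """Accuracy on each held-out generator; label 1 = generated, 0 = real."""
    results = {}
    for name, samples in test_sets.items():
        correct = sum((x > threshold) == bool(y) for x, y in samples)
        results[name] = correct / len(samples)
    return results

# Purely synthetic numbers: (artifact score, label) pairs.
train_set = [(0.1, 0), (0.2, 0), (0.8, 1), (0.9, 1)]  # the single training generator
test_sets = {
    "held_out_generator_a": [(0.15, 0), (0.7, 1), (0.95, 1), (0.3, 0)],
    "held_out_generator_b": [(0.4, 0), (0.45, 1), (0.6, 1), (0.55, 0)],
}

threshold = fit_threshold(train_set)
results = evaluate_held_out(threshold, test_sets)
for name, acc in results.items():
    print(f"{name}: accuracy {acc:.2f}")
```

In this toy setup the detector transfers well to one held-out generator and poorly to the other, which is the kind of gap the protocol is designed to expose.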

The test results show that forensic classifiers trained on CNN-generated images can generalize to other CNN-based generators that were not seen during training. The researchers also found that data augmentation and the diversity of the training images are both important for generalization. For BigGAN, for example, training with augmentation raises the average precision (AP) from 72.2 to 88.2. Performance on other models also improved: CycleGAN from 84.0 to 96.8 and GauGAN from 67.0 to 98.1. In general, training with augmentation helps.
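For readers unfamiliar with the metric, AP summarizes how well a detector ranks generated images above real ones. The following is a minimal sketch of one common way to compute it, not the authors' evaluation code. The function name `average_precision` and the toy labels and scores are illustrative assumptions. It returns a value between 0 and 1, whereas the figures quoted above are on a 0 to 100 scale.

```python
def average_precision(labels, scores):
    """Mean of precision@k over the ranks k at which a positive item appears.

    labels: 1 = generated image (positive), 0 = real image.
    scores: detector confidence that the image is generated.
    Tied scores are not treated specially in this sketch.
    """
    if len(labels) != len(scores):
        raise ValueError("labels and scores must have the same length")
    # Rank all images from most to least confidently "generated".
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precisions = []
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precisions.append(hits / rank)
    if not precisions:
        raise ValueError("at least one positive label is required")
    return sum(precisions) / len(precisions)

# Toy example with made-up detector scores.
labels = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
ap = average_precision(labels, scores)
print(f"AP = {ap:.3f}")  # (1/1 + 2/3 + 3/4) / 3
```

A detector that ranks every generated image above every real one scores 1.0; each real image that outranks a generated one pulls the value down.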

The researchers also found that AP improves as the number of image classes in the training set increases from 2 to 16. The gain levels off beyond that point, however: increasing the number of classes from 16 to 20 yields only a minimal improvement.

The paper, “CNN-generated images are surprisingly easy to spot... for now”, is available on arXiv.