AI chipmaker Cerebras Systems to become chief neural network trainer

September 1, 2021

San Francisco, Sept. 1, 2021

AI chipmaker Cerebras Systems has announced that its hardware can support a neural network with 120 trillion parameters, roughly 100 times the size of the largest models available today. The startup unveiled a new architecture that supports AI models with more parameters than the human brain has synapses, of which there are about 100 trillion.

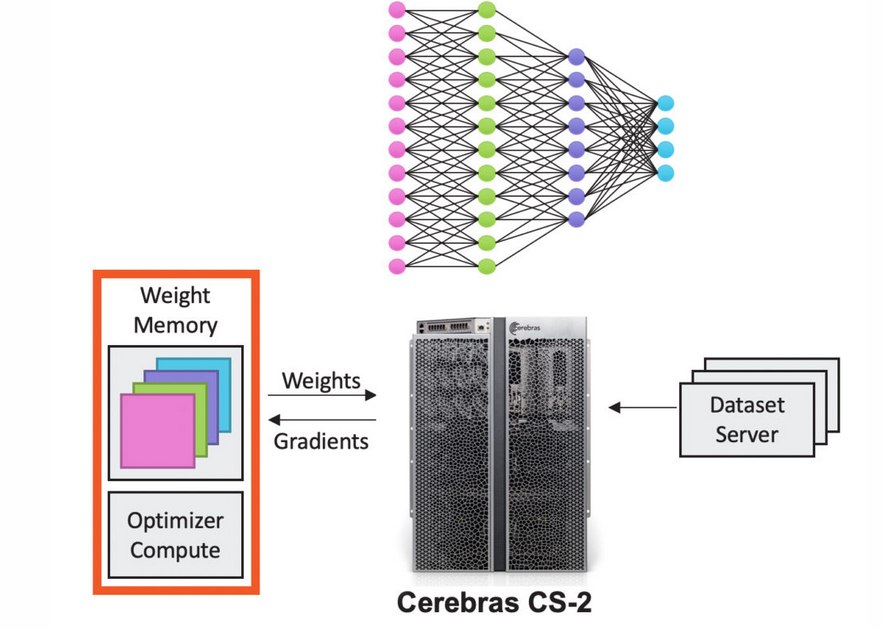

Cerebras has laid the groundwork for a "monumental leap in the scale and potential of AI models," TechRadar reports. Cerebras' CS-2 computer is designed to rapidly train larger neural networks. The CS-2's processor is the second-generation Wafer Scale Engine (WSE-2), the fastest AI processor and largest computer chip ever built.

A single wafer-scale WSE-2 chip contains 2.6 trillion transistors and 850,000 AI-optimized cores.

With the introduction of new technologies called Weight Streaming and MemoryX, a single CS-2 can now train a neural network with up to 120 trillion parameters. More parameters generally mean a more sophisticated AI model.

The largest graphics processor, by comparison, has 54 billion transistors. Most of today's largest AI models are powered by GPU clusters, which "typically reach a maximum of 1 trillion parameters."

Cerebras also showcased its SwarmX hardware, which allows up to 192 CS-2s to work in tandem on a network, speeding up AI training.
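To put the quoted figures side by side, here is a minimal Python sketch of the arithmetic. It uses only the numbers reported in this article; the variable names are illustrative, and the total core count simply assumes that per-chip cores add up across a full 192-system SwarmX cluster.

```python
# Figures as quoted in the article (not independently verified).
WSE2_TRANSISTORS = 2.6e12        # transistors on one WSE-2 chip
WSE2_CORES = 850_000             # AI-optimized cores on one WSE-2 chip
GPU_TRANSISTORS = 54e9           # transistors on the largest graphics processor
CS2_MAX_PARAMS = 120e12          # parameters a single CS-2 can train
GPU_CLUSTER_MAX_PARAMS = 1e12    # typical ceiling quoted for GPU clusters
BRAIN_SYNAPSES = 100e12          # approximate synapse count of the human brain
MAX_CS2_PER_CLUSTER = 192        # CS-2 systems that SwarmX can link

# How many times more transistors the WSE-2 has than the largest GPU.
transistor_ratio = WSE2_TRANSISTORS / GPU_TRANSISTORS

# How the 120-trillion-parameter ceiling compares with GPU clusters
# and with the number of synapses in the human brain.
param_ratio = CS2_MAX_PARAMS / GPU_CLUSTER_MAX_PARAMS
brain_ratio = CS2_MAX_PARAMS / BRAIN_SYNAPSES

# Assumption: cores simply add up across a fully populated cluster.
total_cores = WSE2_CORES * MAX_CS2_PER_CLUSTER

print(f"WSE-2 vs. largest GPU, transistors: {transistor_ratio:.1f}x")
print(f"CS-2 vs. GPU cluster, parameters:   {param_ratio:.0f}x")
print(f"CS-2 parameters vs. brain synapses: {brain_ratio:.1f}x")
print(f"Cores in a 192-system cluster:      {total_cores:,}")
```

By these figures, the WSE-2 carries about 48 times as many transistors as the largest GPU, and the 120 trillion parameter ceiling is 120 times the quoted GPU-cluster maximum, broadly in line with the "100 times" claim.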

The Silicon Valley-based startup has raised $475 million so far. It has already signed deals with GlaxoSmithKline and AstraZeneca, which are using its chips to research new drugs.