“Text-to-image models are exciting tools for inspiration and creativity.”


San Francisco, 6/30/2022

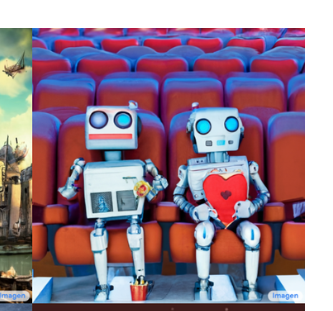

Researchers at search engine giant Google recently presented new work on text-to-image generation, a machine learning approach that combines several AI techniques. Models of this kind can generate high-quality, photorealistic images from a simple text prompt.

After extensive testing, they presented two new text-to-image models: Imagen and Parti. Both generate photorealistic images, but they take different approaches.

How text-to-image models work

With text-to-image models, users enter a text description, and the model generates an image that matches that description as closely as possible. The prompt can be as simple as “an apple” or “a cat,” but more detailed descriptions, such as “there’s a bright, golden glow coming out of the chest,” can also be processed.

The researchers note that “ML models were trained on large image datasets with corresponding text descriptions.” According to them, this improved image quality and allowed the models to handle a broader range of descriptions. The Google researchers also describe OpenAI’s DALL-E 2 as a significant breakthrough.

The researchers describe how Imagen and Parti work this way:

“Imagen and Parti build on earlier models. Transformer models can process words in relation to one another within a sentence, and they are the basis for how we represent text in our text-to-image models. Both models also use a new technique that helps generate images that more closely match the text description. While Imagen and Parti use similar technology, they follow different but complementary strategies.

Imagen is a diffusion model that learns to convert a pattern of random dots into images. These images start at a low resolution and are then progressively upscaled to higher resolutions. Recently, diffusion models have been successful in both image and audio tasks, such as enhancing image resolution, recoloring black-and-white photos, editing specific regions of an image, uncropping images, and text-to-speech synthesis.

Parti’s approach first converts a collection of images into a sequence of code entries, much like puzzle pieces. A given text prompt is then translated into these code entries, and a new image is created. This approach takes advantage of existing research and infrastructure for large language models such as PaLM, and is critical for handling long, complex text prompts and generating high-quality images.”
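To make the two strategies more concrete, here is a minimal, hypothetical Python sketch. It is not Google’s code, and every name in it is illustrative. The first half mimics a diffusion sampler in the spirit of Imagen: it starts from random noise and repeatedly asks a denoiser for its best guess of the clean image. The linear noise schedule and the deterministic DDIM-style update are assumptions taken from the general diffusion literature, not details the researchers give here. The `denoiser` is a hypothetical oracle standing in for a trained, text-conditioned network. The second half mimics Parti’s “puzzle pieces.” `encode` maps patches of an image to indices in a small, made-up codebook, and `decode` reassembles them. `generate_tokens` is an autoregressive loop in which `next_token` is a hypothetical stand-in for a Transformer that reads the text prompt.

```python
import math
import random

# ---- Imagen-style idea: denoise random noise into an image (toy, 1-D "image") ----

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal fractions alpha_bar[t] for a linear noise schedule (an assumption).

    alpha_bar[0] = 1.0 means "no noise"; alpha_bar[num_steps] is close to pure noise.
    """
    alpha_bars = [1.0]
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        alpha_bars.append(alpha_bars[-1] * (1.0 - beta))
    return alpha_bars


def sample(denoiser, size, num_steps=50, seed=0):
    """Deterministic DDIM-style reverse process: noise -> image, one small step at a time.

    `denoiser(x, t)` is a hypothetical stand-in for a trained, text-conditioned network
    that returns its estimate of the clean image given the noisy image x at step t.
    """
    rng = random.Random(seed)
    alpha_bars = make_alpha_bars(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]  # start from random noise
    for t in range(num_steps, 0, -1):
        x0_hat = denoiser(x, t)
        a_t, a_prev = alpha_bars[t], alpha_bars[t - 1]
        # Noise implied by the current sample and the clean-image estimate.
        eps_hat = [(xi - math.sqrt(a_t) * ci) / math.sqrt(1.0 - a_t)
                   for xi, ci in zip(x, x0_hat)]
        # Move to the slightly less noisy step t - 1.
        x = [math.sqrt(a_prev) * ci + math.sqrt(1.0 - a_prev) * ei
             for ci, ei in zip(x0_hat, eps_hat)]
    return x


# ---- Parti-style idea: images as sequences of discrete code entries ("puzzle pieces") ----

def encode(image, codebook, patch=2):
    """Replace each patch of the image with the index of its nearest codebook entry."""
    tokens = []
    for i in range(0, len(image), patch):
        chunk = image[i:i + patch]
        nearest = min(range(len(codebook)),
                      key=lambda k: sum((a - b) ** 2 for a, b in zip(chunk, codebook[k])))
        tokens.append(nearest)
    return tokens


def decode(tokens, codebook):
    """Reassemble an image by concatenating the codebook entries the tokens point to."""
    image = []
    for k in tokens:
        image.extend(codebook[k])
    return image


def generate_tokens(next_token, prompt, length):
    """Autoregressive loop: predict one image token at a time from the prompt and earlier tokens.

    `next_token(prompt, tokens)` is a hypothetical stand-in for a trained Transformer.
    """
    tokens = []
    for _ in range(length):
        tokens.append(next_token(prompt, tokens))
    return tokens


if __name__ == "__main__":
    target = [0.0, 0.5, 1.0, 0.5]                                # made-up 4-pixel "image"
    codebook = [[0.0, 0.0], [0.0, 0.5], [1.0, 0.5], [1.0, 1.0]]  # made-up codebook

    # Oracle denoiser that always "knows" the target; a real model must learn this.
    image = sample(lambda x, t: target, size=len(target))
    print(encode(image, codebook))  # [1, 2]

    # Hypothetical "model" that emits tokens 1 then 2 for the prompt.
    tokens = generate_tokens(lambda prompt, prev: [1, 2][len(prev)], "a cat", 2)
    print(decode(tokens, codebook))  # [0.0, 0.5, 1.0, 0.5]
```

In a real system, learned networks would replace the oracle and the toy token predictor, and the images would be large 2-D arrays. The sketch only shows the shape of the two loops: gradual denoising on one side, and token-by-token prediction followed by decoding on the other.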

Still, these models are far from perfect. The researchers point out that they “cannot reliably determine the number of objects (e.g., ‘ten apples’) or their correct placement based on specific spatial descriptions (e.g., ‘a red ball to the left of a blue block with a yellow triangle on it’).” The models also make more mistakes as prompts become more complex.


As the researchers put it, “text-to-image models are exciting tools for inspiration and creativity.” But make no mistake: these models also carry “risks related to disinformation, bias, and safety.”

That is why Google’s researchers are “discussing responsible AI practices and the steps necessary to use this technology safely. As a first step, we are using easily identifiable watermarks to ensure that people can always recognize an image generated by Imagen or Parti. We are also conducting experiments to better understand model biases, such as in the representation of people and cultures, and exploring possible remedies. The Imagen and Parti papers discuss these issues in detail.”

What’s next for text-to-image models at Google?

“We’ll be pushing new ideas that combine the best of both models and exploring related tasks, such as the ability to create and manipulate images interactively through text. We also continue to conduct in-depth comparisons and evaluations in line with our principles for responsible AI. Our goal is to bring user experiences based on these models into the world in a safe, responsible, and creativity-enhancing way.”