With ImageBind, complex scene representation could become possible in the future

May 12, 2023

San Francisco, 5/11/2023

Meta has released a new multimodal AI model called ImageBind as open source. ImageBind is still at an early stage, but it is meant to serve as a framework that could eventually generate complex scenes and environments from one or more inputs, such as a text or image prompt.

For example, given an image of a beach, ImageBind could identify the sound of waves. Likewise, given a photo of a tiger together with the sound of a waterfall, the system could produce a video that combines both.
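To make the beach-and-waves example more concrete, the sketch below assumes, as Meta's ImageBind research describes, that each kind of input is mapped into one shared embedding space, so a matching sound can be found by comparing vectors. The file names, the three-dimensional vectors and the helper functions `cosine_similarity` and `closest_audio` are hypothetical toy values chosen for illustration. They are not real ImageBind output and do not reflect Meta's actual API.

```python
import math

# Hypothetical toy embeddings. Real ImageBind embeddings are far larger;
# these 3-dimensional values are invented purely for illustration.
image_embeddings = {
    "beach.jpg": [0.9, 0.1, 0.2],
}

audio_embeddings = {
    "waves.wav": [0.85, 0.15, 0.25],
    "traffic.wav": [0.1, 0.9, 0.3],
    "birdsong.wav": [0.2, 0.3, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_audio(image_name):
    """Return the audio clip whose embedding is most similar to the image's."""
    query = image_embeddings[image_name]
    return max(
        audio_embeddings,
        key=lambda name: cosine_similarity(query, audio_embeddings[name]),
    )


print(closest_audio("beach.jpg"))  # prints "waves.wav"
```

Because every modality lands in the same vector space in this sketch, the "image to sound" lookup reduces to a nearest-neighbor search. No model is specifically trained to map beaches to waves.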

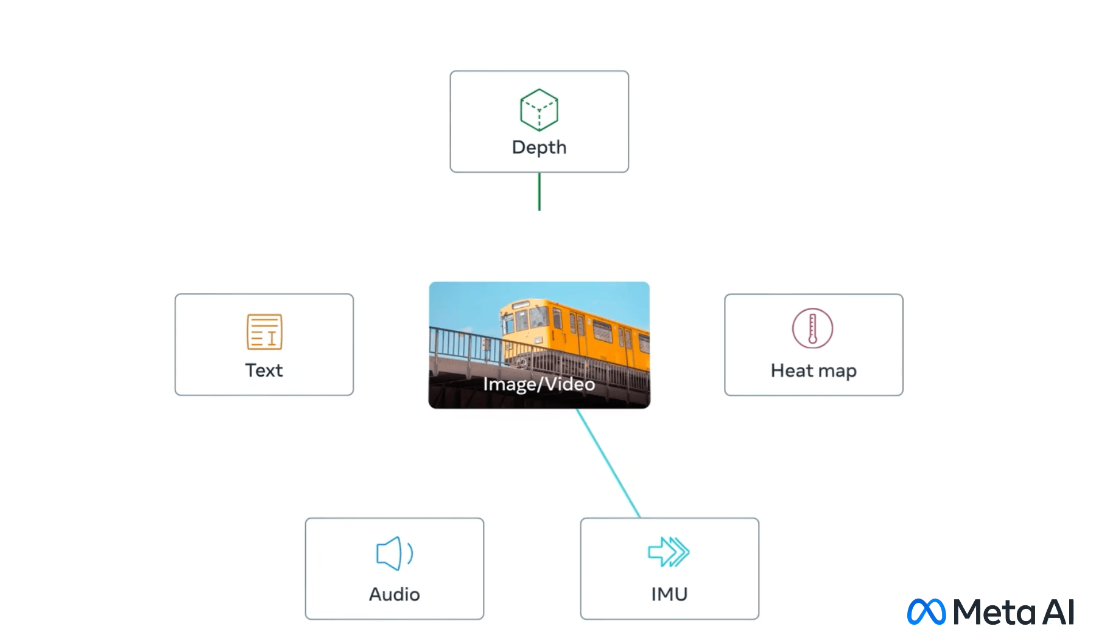

The model currently works with six types of data: text, visual data (images and video), audio, depth, temperature (thermal) and motion. Its approach mirrors the way humans take in information through several senses and relate the inputs from those different senses to one another.

According to Meta, the model gives machines a "holistic understanding" that links objects in a photo to their corresponding sound, 3D structure, temperature and movement.

Meta has not yet released ImageBind as a product, but possible applications include better search for photos and videos and the creation of mixed reality environments. Meta plans to extend ImageBind to additional senses in the future.