Google: its AI system detects 26 skin diseases as accurately as dermatologists

16 September 2019

New York, 16 September 2019

Skin diseases are among the most common ailments worldwide, ranking just behind colds, fatigue and headaches. An estimated 25% of all treatments given to patients around the world are for skin diseases, and up to 37% of patients seen in clinics have at least one skin disease.

The enormous caseload and a global shortage of dermatologists have led many patients to turn to general practitioners, who are usually less accurate than specialists at identifying skin diseases. This trend motivated Google researchers to develop an AI system capable of detecting the most common dermatological conditions seen in primary care. In a paper ("A Deep Learning System for Differential Diagnosis of Skin Diseases") and an accompanying blog post, they claim that the system's accuracy across 26 skin diseases is on par with that of US board-certified dermatologists.

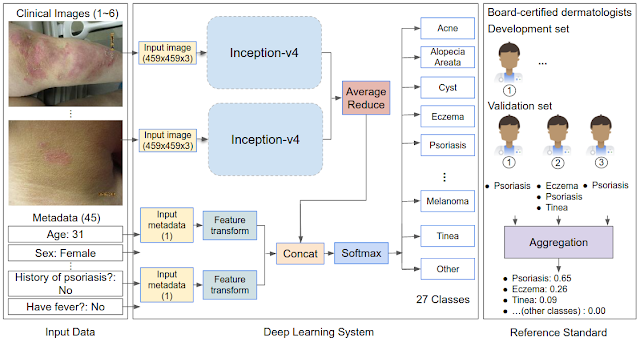

"We have developed a Deep Learning System (DLS) to address the most common skin diseases in primary care," wrote Google software engineer Yuan Liu and Google Health technical program manager Dr. Peggy Bui. "This study shows the potential of the DLS to improve the ability of general practitioners to diagnose skin diseases accurately without additional specialist training."

As Liu and Bui further explained, dermatologists do not just provide a single diagnosis for each skin problem; they also establish a ranked list of possible diagnoses (a differential diagnosis), which is then systematically narrowed down through laboratory tests, imaging, procedures and consultations. Google's research system works the same way. Its inputs include one or more clinical images of the skin abnormality and up to 45 types of metadata (e.g., self-reported elements of the medical history such as age, sex and symptoms), and it likewise produces a ranked list of possible diagnoses.
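The ranking step described above can be illustrated with a minimal Python sketch. It assumes the model has already produced one score per condition for a case; the function name `rank_differential`, the condition names and the scores are hypothetical and are not taken from Google's system. The sketch covers only turning scores into a ranked differential, not how images and metadata are combined.

```python
from typing import Dict, List, Tuple


def rank_differential(scores: Dict[str, float], k: int = 3) -> List[Tuple[str, float]]:
    """Return the k highest-scoring conditions, best first (hypothetical helper)."""
    # Sort by descending score; ties are broken alphabetically so output is deterministic.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:k]


# Hypothetical per-condition scores for a single case (illustrative values only).
example_scores = {
    "Eczema": 0.42,
    "Psoriasis": 0.25,
    "Tinea": 0.18,
    "Acne": 0.10,
    "Other": 0.05,
}

print(rank_differential(example_scores))  # [('Eczema', 0.42), ('Psoriasis', 0.25), ('Tinea', 0.18)]
```

Returning the top k rather than a single label mirrors how a clinician's differential keeps several candidates open for later tests to narrow down.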

The research team says it used 17,777 de-identified cases from 17 primary care clinics in two states. The team split the data by time: records from 2010 to 2017 were used to train the AI system, and records from 2017 to 2018 were held out for evaluation. During training, the model drew on more than 50,000 differential diagnoses provided by over 40 dermatologists.
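A time-based split like the one described, where older records train the model and newer ones are held out, can be sketched as follows. The `Case` type, the `temporal_split` helper and the cutoff date are hypothetical; the article gives only year ranges, which overlap in 2017, so the exact boundary the team used is not known.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Tuple


@dataclass
class Case:
    """Hypothetical minimal record: an ID and the date of the visit."""
    case_id: str
    visit_date: date


def temporal_split(cases: List[Case], cutoff: date) -> Tuple[List[Case], List[Case]]:
    """Cases before the cutoff go to training; cases on or after it go to evaluation."""
    train = [c for c in cases if c.visit_date < cutoff]
    evaluation = [c for c in cases if c.visit_date >= cutoff]
    return train, evaluation


cases = [
    Case("a", date(2011, 3, 1)),
    Case("b", date(2016, 12, 31)),
    Case("c", date(2017, 6, 15)),
    Case("d", date(2018, 2, 1)),
]
# The cutoff here is an arbitrary illustrative choice, not the study's.
train, evaluation = temporal_split(cases, cutoff=date(2017, 6, 1))
print([c.case_id for c in train], [c.case_id for c in evaluation])  # ['a', 'b'] ['c', 'd']
```

Splitting by date rather than at random tests whether the model generalizes to cases collected after the ones it learned from.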

The comparative tests pointed to the Google system's strength in diagnostic accuracy. The evaluation covered 3,750 cases, whose ground-truth labels were derived by combining dermatologists' diagnoses. On these, the AI system achieved top-1 and top-3 accuracies of 71% and 93%, respectively. The system was also compared with three categories of clinicians (dermatologists, general practitioners and nurses) on a subset of the validation data set. According to the team, its top three predictions reached a diagnostic accuracy of 90%, which the team described as comparable to dermatologists (75%) and "significantly higher" than general practitioners (60%) and nurses (55%).
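Top-k accuracy, the metric behind the 71% and 93% figures, can be computed as below. This sketch assumes the common definition: a case counts as correct when its reference diagnosis appears among the model's k highest-ranked predictions. The study's exact ground-truth procedure may differ, and the function name `top_k_accuracy` and the cases shown are invented for illustration.

```python
from typing import Sequence


def top_k_accuracy(predictions: Sequence[Sequence[str]], labels: Sequence[str], k: int) -> float:
    """Fraction of cases whose reference label is among the first k ranked predictions."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("need at least one case")
    hits = sum(1 for ranked, label in zip(predictions, labels) if label in ranked[:k])
    return hits / len(labels)


# Hypothetical ranked differentials for four cases (illustrative only).
preds = [
    ["Eczema", "Psoriasis", "Tinea"],
    ["Acne", "Folliculitis", "Rosacea"],
    ["Psoriasis", "Eczema", "Tinea"],
    ["Tinea", "Eczema", "Psoriasis"],
]
truth = ["Eczema", "Rosacea", "Eczema", "Acne"]

print(top_k_accuracy(preds, truth, k=1))  # 0.25
print(top_k_accuracy(preds, truth, k=3))  # 0.75
```

Top-3 accuracy is always at least as high as top-1, which is why the two figures reported for the system differ so much.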

To check for possible skin-type bias, the team broke down the AI system's performance by Fitzpatrick skin type, a scale ranging from Type I ("pale white, always burns, never tans") to Type VI ("darkest brown, never burns"). Focusing on skin types that made up at least 5% of the data, they found that the model performed similarly across them, with top-1 accuracy of 69% to 72% and top-3 accuracy of 91% to 94%.
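A per-subgroup breakdown like the Fitzpatrick analysis can be sketched by grouping cases and computing top-k accuracy within each group, skipping groups too small to report. The function `accuracy_by_group` and the data are hypothetical; the article uses a 5% threshold, while the toy example below passes `min_share=0.2` only because it has six cases.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def accuracy_by_group(
    predictions: Sequence[Sequence[str]],
    labels: Sequence[str],
    groups: Sequence[str],
    k: int,
    min_share: float = 0.05,
) -> Dict[str, float]:
    """Top-k accuracy per group, omitting groups below min_share of all cases."""
    if not (len(predictions) == len(labels) == len(groups)):
        raise ValueError("inputs must have the same length")
    if not labels:
        raise ValueError("need at least one case")
    hits_by_group: Dict[str, List[bool]] = defaultdict(list)
    for ranked, label, group in zip(predictions, labels, groups):
        # A case is a hit if its reference label is in the top k predictions.
        hits_by_group[group].append(label in ranked[:k])
    total = len(labels)
    return {
        group: sum(hits) / len(hits)
        for group, hits in sorted(hits_by_group.items())
        if len(hits) / total >= min_share  # skip under-represented groups
    }


# Hypothetical toy data: ranked predictions, reference labels, Fitzpatrick types.
preds = [
    ["Eczema", "Psoriasis"],
    ["Acne", "Rosacea"],
    ["Tinea", "Eczema"],
    ["Psoriasis", "Eczema"],
    ["Acne", "Tinea"],
    ["Eczema", "Acne"],
]
truth = ["Eczema", "Rosacea", "Tinea", "Psoriasis", "Tinea", "Eczema"]
skin_types = ["II", "II", "II", "III", "III", "V"]

print(accuracy_by_group(preds, truth, skin_types, k=1, min_share=0.2))
print(accuracy_by_group(preds, truth, skin_types, k=2, min_share=0.2))
```

Here Type V holds one of six cases (about 17%), below the 0.2 threshold, so it is left out of the report; small groups would otherwise yield noisy accuracy estimates.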

According to Google, the success of deep learning in producing differential diagnoses of skin diseases suggests that its system could go a long way toward compensating for the shortage of dermatologists.