New AI model in the GPT-3 family can now resolve ambiguity, among other things


San Francisco, Nov. 30, 2022

A new AI model in OpenAI’s GPT-3 family is capable of producing longer content, including poems and rhymed songs, at a level not achieved by its predecessors.

The "text-davinci-003" language model performs the same tasks but requires fewer instructions than its predecessors, OpenAI said. The model is particularly suited for tasks that require deep knowledge of a particular topic, such as creative content. It shows a greatly improved ability to infer solutions and understand the intent behind the text.

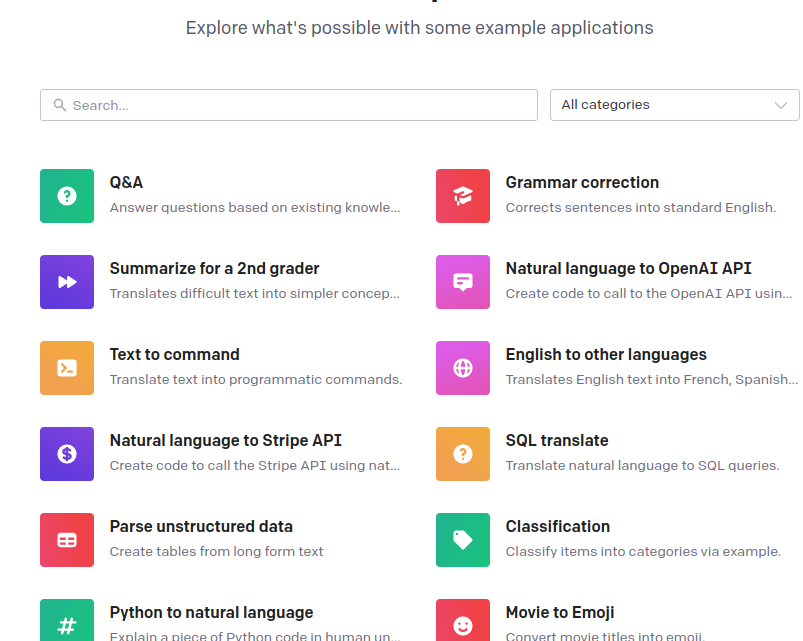

However, Davinci is slower and more expensive per API call than other GPT-3 models. Users with an OpenAI account can experiment with the GPT-3 models through the AI Lab’s Playground webpage, where they can enter instructions to generate text results for a fee.

Background:

The incredible career of BERT

Machine learning systems excel at the tasks they have been trained for, thanks to a combination of large datasets and powerful models. They can perform a wide range of functions, from completing code to creating recipes. Perhaps the most popular task is generating new text (a content apocalypse) that is indistinguishable from human writing.

In 2018, the BERT (Bidirectional Encoder Representations from Transformers) model sparked a discussion about how ML models can learn to read and speak. Today, LLMs, or large language models, are rapidly evolving to handle a wide range of applications.

In an era of text-to-anything generation with impressive AI and ML models, it is important to remember that these systems do not necessarily understand language; they are tuned to look as if they understand it. Speaking of language models, the correlation between the number of parameters and sophistication has held up remarkably well.

The BERT Model

BERT is an open source machine learning framework for natural language processing (NLP). BERT was developed to help computers understand the meaning of ambiguous language in text by using the surrounding text to establish context. The BERT framework has been trained with text from Wikipedia and can be fine-tuned with question and answer datasets.

BERT stands for Bidirectional Encoder Representations from Transformers. It is based on the Transformer, a deep learning model in which each output element is connected to each input element and the weights between them are calculated dynamically based on their connection. (In NLP, this process is called attention.)
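To make the idea of dynamically calculated weights concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not BERT's implementation: the token vectors are invented, and the learned query, key, and value projections of a real Transformer are left out.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Toy self-attention: queries, keys, and values are the inputs themselves.

    A real Transformer applies learned projection matrices first;
    they are omitted here to keep the sketch short.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this position against every position, itself included.
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # Each output is a weighted average of all input vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional vectors for a three-token input.
tokens = ["river", "bank", "money"]
vectors = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]
outputs, weights = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how strongly one token draws on every token in the input. The weights are recomputed for every input, which is what "dynamically calculated" means here.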

In the past, language models could only read text input sequentially – either left-to-right or right-to-left – but not both simultaneously. BERT is designed to read in both directions simultaneously. This capability, made possible by the introduction of transformers, is called bidirectionality.
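The difference between the reading directions can be sketched as the set of tokens a model may look at when it processes one position. The function name `visible_context` and the mode labels are made up for this illustration.

```python
def visible_context(tokens, position, mode):
    """Return the tokens a model may use when processing tokens[position]."""
    if mode == "left-to-right":
        return tokens[:position]
    if mode == "right-to-left":
        return tokens[position + 1:]
    if mode == "bidirectional":
        return tokens[:position] + tokens[position + 1:]
    raise ValueError(f"unknown mode: {mode}")

sentence = ["she", "sat", "on", "the", "bank", "of", "the", "river"]
target = sentence.index("bank")
for mode in ("left-to-right", "right-to-left", "bidirectional"):
    print(mode, visible_context(sentence, target, mode))
```

Only the bidirectional view contains both "sat" and "river", the two words that together make the riverside reading of "bank" clear.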

Using this bidirectional capability, BERT has been pre-trained for two different but related NLP tasks: Masked Language Modeling and Next Sentence Prediction.

The goal of Masked Language Model (MLM) training is to hide a word in a sentence and then have the program predict which word was hidden (masked) based on the context of the hidden word. The goal of the training for predicting the next sentence is to have the program predict whether two given sentences have a logical, sequential connection or whether their relationship is simply random.
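The two pre-training tasks can be sketched as ways of building training examples from plain text. The following is a simplified illustration, not BERT's actual data pipeline: it hides exactly one token per sentence, as described above, and the helper names `mask_one_token` and `make_nsp_pair` as well as the sample sentences are invented for this example.

```python
import random

MASK = "[MASK]"

def mask_one_token(tokens, rng):
    """Hide one randomly chosen token; return masked tokens, position, and label."""
    position = rng.randrange(len(tokens))
    masked = list(tokens)
    label = masked[position]
    masked[position] = MASK
    return masked, position, label

def make_nsp_pair(sentences, index, rng):
    """Build one next-sentence example: (first, second, is_next)."""
    first = sentences[index]
    if rng.random() < 0.5:
        # Positive example: the sentence that really follows.
        return first, sentences[index + 1], True
    # Negative example: any sentence other than the first one and its true successor.
    candidates = [s for i, s in enumerate(sentences) if i not in (index, index + 1)]
    return first, rng.choice(candidates), False

rng = random.Random(0)  # fixed seed so the sketch is repeatable
sentence = ["the", "man", "went", "to", "the", "store"]
masked, position, label = mask_one_token(sentence, rng)
print(masked, "->", label)

document = [
    "He went to the store.",
    "He bought milk.",
    "Penguins cannot fly.",
    "It rained all day.",
]
first, second, is_next = make_nsp_pair(document, 0, rng)
print(first, "|", second, "|", is_next)
```

In both cases the label comes from the text itself, so no human annotation is needed. The model is trained to recover `label` from `masked` and to predict `is_next` from the sentence pair.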

Background

Transformers were first introduced by Google in 2017. At the time of their introduction, language models mainly used recurrent neural networks (RNN) and convolutional neural networks (CNN) to perform NLP tasks.

Although these models are competent, Transformers are considered a significant improvement because, unlike RNNs and CNNs, they do not require sequences of data to be processed in a specific order. Since Transformers can process data in any order, it is possible to train with larger amounts of data than was possible before their existence. This, in turn, facilitated the creation of pre-trained models such as BERT, which was trained with large amounts of language data prior to its release.

In 2018, Google introduced BERT and made it available as open source. In its research phase, the framework achieved breakthrough results on 11 natural language understanding tasks, including sentiment analysis, semantic role labeling, sentence classification, and disambiguation of polysemous words (words with multiple meanings).

Addressing these tasks distinguishes BERT from previous language models such as word2vec and GloVe, which are limited in interpreting context and polysemous words. BERT effectively addresses ambiguity, which researchers in the field believe is the greatest challenge in natural language understanding. It is able to parse language with a relatively human-like "common sense."

However, BERT was trained only with an unlabeled, plain text corpus (namely, the entire English Wikipedia and the BooksCorpus). It continues to learn unsupervised from the unlabeled text and improves even when used in practical applications (e.g., Google search). Its pre-training serves as a base layer of "knowledge" upon which it can build. From there, BERT can adapt to the ever-growing amount of searchable content and queries, and be tuned to the user's specifications. This process is referred to as transfer learning.

As mentioned earlier, BERT is enabled by Google’s research on Transformers. The transformer is the part of the model that gives BERT the increased ability to understand context and ambiguity in the language. The transformer does this by processing a particular word in the context of all the other words in a sentence, rather than processing them individually. By looking at all the surrounding words, the transformer allows the BERT model to understand the entire context of the word and thus better capture the intent of the searcher.

This is in contrast to the traditional method of language processing called word embedding, where previous models such as GloVe and word2vec map each individual word to a vector that represents only one dimension, a sliver, of the meaning of that word.
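The limitation of a fixed word-to-vector mapping can be shown with a toy example. The embedding values below are invented, and `contextual_vector` is only a crude stand-in for a contextual model (it blends a word with the average of its sentence). It is not how BERT computes representations, but it shows the behavior that matters: the static lookup ignores the sentence, while the contextual vector changes with it.

```python
# Hypothetical two-dimensional static embeddings (values invented for illustration).
STATIC = {
    "river": [1.0, 0.0],
    "money": [0.0, 1.0],
    "bank": [0.5, 0.5],
    "the": [0.2, 0.2],
    "in": [0.2, 0.2],
}

def static_vector(word, sentence):
    """Static lookup: the sentence is ignored, so the vector never changes."""
    return STATIC[word]

def contextual_vector(word, sentence):
    """Toy contextual vector: blend the word with the mean of its sentence."""
    dim = len(STATIC[word])
    mean = [sum(STATIC[w][i] for w in sentence) / len(sentence) for i in range(dim)]
    return [(a + b) / 2 for a, b in zip(STATIC[word], mean)]

river_sentence = ["the", "river", "bank"]
money_sentence = ["money", "in", "the", "bank"]

same_static = static_vector("bank", river_sentence) == static_vector("bank", money_sentence)
same_contextual = contextual_vector("bank", river_sentence) == contextual_vector("bank", money_sentence)
print(same_static, same_contextual)  # prints: True False
```

With the static table, "bank" gets the same vector next to "river" as next to "money", so the two senses cannot be told apart.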

Models built on these word embeddings typically require large labeled datasets for each task. While they are suitable for many general NLP tasks, they fail for context-heavy predictive question answering because all words are in some sense fixed to a vector or meaning. BERT uses a masked language modeling method to prevent the word in focus from "seeing itself," i.e., having a fixed meaning regardless of its context. BERT is then forced to identify the masked word based on context alone. In BERT, words are defined by their environment, not by a predetermined identity. In the words of English linguist John Rupert Firth, "You shall know a word by the company it keeps."